Disruption is the new normal! – This happens to be the current motif of the AI landscape. DeepSeek has posed some serious challenges to the conventional approach to AI model development.

It has shown, at least as things currently stand, that massive capital expenditure on training LLMs may not be necessary. In fact, the advancement of AI isn’t just about the amount of data you put in, but how well you can prompt it! This reminds me of a talk in which, when asked whether an Indian startup with limited funding could build something substantial in the AI space, Sam Altman responded that it is “totally hopeless” for them to try to compete on training foundation models. But now DeepSeek has emerged with a low-cost, innovative approach that challenges this very notion.

Rookie to Dethrone OpenAI and Gemini

I would like to give a huge shout-out to Carey Lening for investigating DeepSeek. It is an interesting piece, in case you want to have a look – A Deep Dive.

Now, coming back to my post: Tom Bilyeu of Impact Theory came up with interesting insights into how the rookie is all set to dethrone giants like OpenAI and Gemini. Here are the five major takeaways from his podcast:

1. Instead of depending on huge data sets and costly training techniques, DeepSeek cleverly pulls insights from existing AI models like ChatGPT.

How?

It’s like how we use ChatGPT to access info.

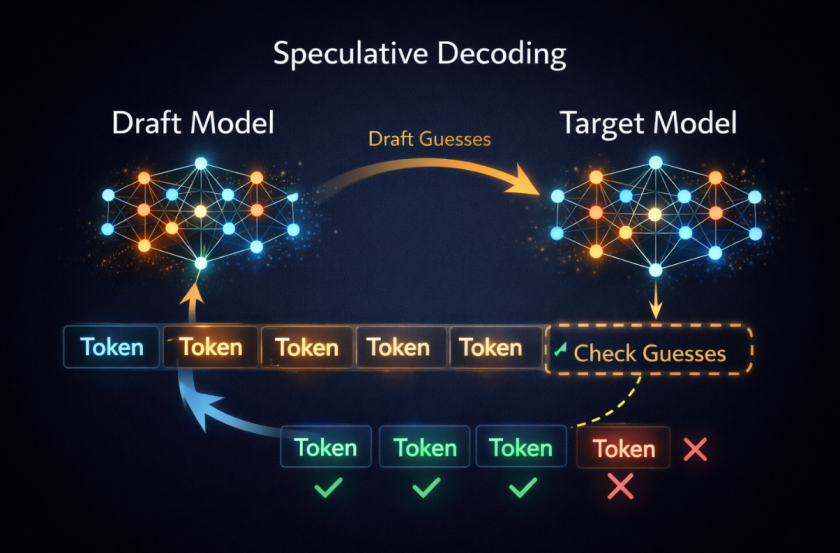

Generally, LLMs require massive datasets, from which they learn patterns, facts and nuances; together, these enable the model to generate accurate, contextually relevant responses and understand complex queries. DeepSeek leveraged all this by asking the right set of questions or statements. With the help of the right prompts, the LLM was able to dig in and collect insights from the internal structure of the existing model without retraining, making it highly efficient at generating accurate responses.

2. LLMs require huge amounts of computational power to train, and GPUs are the handiest tool for the job. They are the playground for the intensive computational tasks required to train AI models. With DeepSeek in the picture, training models from scratch could become a thing of the “past”. New models could simply extract knowledge from a source (such as ChatGPT) via prompts; consequently, GPUs may not be needed as heavily. However, their role might evolve to focus on enhancing efficiency in data retrieval and model fine-tuning.

3. Since LLMs have already been trained on large and diverse datasets, DeepSeek and similar tools will work well. However, when it comes to specialized fields such as physics, chemistry and biology, the general-purpose knowledge in existing models may not be sufficient to provide the depth and precision that is critical to achieving breakthroughs. Specialized domains require a thorough understanding, which is only possible with intensive training in the specific subject.

Eventually, more intensive training will be required to fine-tune AI models for specialized applications. Therefore, a combination of both methods, DeepSeek’s prompt-based approach coupled with intensive training on domain-specific datasets, could become the next trajectory in the AI space.

4. Companies might think of investing in specialized fields like physics, but with a technology like DeepSeek around, they might find themselves at a competitive disadvantage. This could well create some apprehension about investing in companies that are pouring resources into building physical infrastructure. After all, with the rise of more efficient alternatives like DeepSeek, investing in expensive infrastructure for training specialized models could become a risky bet for companies.

5. There’s growing speculation about the motivations behind its launch. Some are of the view that it is a strategic response to U.S. restrictions on advanced semiconductor exports. By developing DeepSeek, China might want to prove that AI innovation can happen without cutting-edge chips, referring of course to Nvidia.

Whatever the case, Tom said, this is a wake-up call for the U.S. in the global AI race. Most importantly, if China can reach similar levels of AI through better software, smarter algorithms and different ways of computing, then limits on hardware might not be as helpful in slowing down progress as we first believed.
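
As an aside on the first takeaway, here is a minimal sketch of what “pulling insights from an existing model via prompts” could look like in code. It is only an illustration under stated assumptions, not DeepSeek’s actual pipeline: `collect_responses`, `to_jsonl` and `fake_teacher` are hypothetical names, and the “existing model” is a stub function rather than a real API call.

```python
import json
from typing import Callable, Dict, List


def collect_responses(
    prompts: List[str], teacher: Callable[[str], str]
) -> List[Dict[str, str]]:
    """Ask the existing model each prompt and keep the prompt/response pairs."""
    records = []
    for prompt in prompts:
        response = teacher(prompt)
        if response.strip():  # skip empty or whitespace-only answers
            records.append({"prompt": prompt, "response": response})
    return records


def to_jsonl(records: List[Dict[str, str]]) -> str:
    """Serialize the pairs as JSON Lines, one record per line."""
    return "\n".join(json.dumps(record) for record in records)


def fake_teacher(prompt: str) -> str:
    # Hypothetical stand-in for a call to an existing model; not a real API.
    return f"(answer to: {prompt})"


prompts = [
    "Explain photosynthesis in two sentences.",
    "What is a hash map?",
]
records = collect_responses(prompts, fake_teacher)
print(to_jsonl(records))
```

In practice, the stub would be swapped for a call to whichever existing model is being queried; the quality of what comes back depends heavily on how well the prompts are chosen, which is the point the takeaway makes.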

So, what next?

As of now, the emergence of DeepSeek not only challenges conventional AI development but could also shake up market dynamics and raise the geopolitical stakes. Not to forget the uncertain future of AI investment. Who knows, this could be a new chapter in the race for AI development and dominance! Which makes us think: Can the established leaders keep up, or will new challengers change the game?