Hyperdimensional computing (HDC) is an emerging computational paradigm based on a cognitive model that relies on high dimensionality and randomness.

Cognitive modelling refers to the simulation of how the human brain solves problems and processes information. Much of artificial intelligence is cognitive modelling at play.

Going a step further, hyperdimensional computing enables AI systems to hold onto memories and analyse new information. Like the human brain, or its neurons to be more specific, HDC systems compare, contrast and process data by integrating current and past information.

Processing new data relative to previously encountered information is what makes this a new computational paradigm from the conventional computing point of view.

Previous HDC systems could perform only one particular task at a time, such as natural language processing (NLP) or a time series problem. The new HDC systems, however, are able to carry out all core computations in-memory.

Natural Language Processing

Natural language processing, or NLP, is an AI technology that bridges the interaction between humans and computers. It generally entails converting human language data into a form that machines can understand. A few NLP examples are:

- Siri, Alexa or Google Assistant

- Voice text messaging

- Autocomplete

- Autocorrect

- Machine Translation

- Customer Service Automation

NLP is a customer-centric technology that aims to read, interpret, comprehend and make sense of human language.

Time Series Problems

Time series problems involve observations recorded at successive points in time and used for predictive analysis. The interval between observations could be a minute, an hour, a day, a month or a year. Time series forecasting and modelling are among the most important tools in data analysis.

They help facilitate decision making. Some of the areas where time series forecasting is used are:

- Stock price prediction

- Trading strategies for investors

- Temperature forecasting

- Rainfall forecasting

A time series that records a single variable is called univariate. A multivariate time series contains records of multiple variables.
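To make the distinction concrete, here is a small Python sketch. The readings are invented for illustration, and `naive_forecast` is a hypothetical helper showing only the simplest kind of prediction (the mean of the most recent observations), not a serious forecasting method.

```python
# Hypothetical daily readings, invented for illustration only.

# Univariate: one variable per time step (temperature in degrees Celsius).
univariate = [21.5, 22.0, 19.5, 20.0]

# Multivariate: several variables recorded at each time step.
multivariate = [
    {"temperature_c": 21.5, "rainfall_mm": 0.0},
    {"temperature_c": 22.0, "rainfall_mm": 2.0},
    {"temperature_c": 19.5, "rainfall_mm": 4.0},
    {"temperature_c": 20.0, "rainfall_mm": 2.0},
]

def naive_forecast(series, window=2):
    """Forecast the next value as the mean of the last `window` observations."""
    recent = series[-window:]
    return sum(recent) / len(recent)

# Forecast the next temperature from the univariate series.
print(naive_forecast(univariate))  # 19.75

# Any single column of the multivariate series is itself a univariate series.
rainfall = [row["rainfall_mm"] for row in multivariate]
print(naive_forecast(rainfall, window=4))  # 2.0
```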

Joint research between ETH and IBM

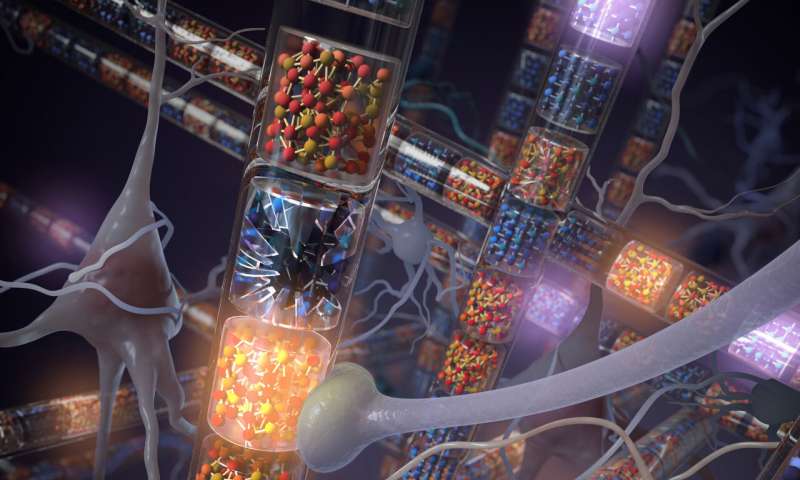

The current study aims to merge two concepts, in-memory computing and hyperdimensional computing, into a single, completely new computational paradigm.

According to the lead researchers, an in-memory computing platform is being built at IBM Research Zurich using phase-change memory (PCM), while researchers at ETH Zurich are working on a hyperdimensional computing paradigm that mimics the human brain.

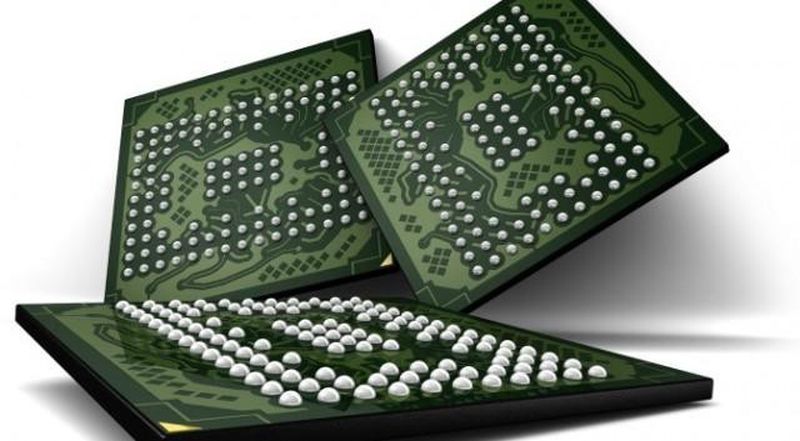

What is phase-change memory?

Phase-change memory is a type of non-volatile computer memory, meaning it does not lose its content when power is lost. It is also known as PCM or PCRAM, and it is one form of NVRAM (non-volatile RAM).

Because of its superior performance characteristics, it is also referred to as perfect RAM.

It not only promises faster RAM speeds, but can also store data with low power requirements.

Phase-change memory does not need to erase existing data before writing new data. It can endure more write cycles than its NAND counterpart. Additionally, it is capable not only of executing code like existing DRAM but also of storing data like NAND.

Therefore, the technology behind PCM offers faster performance and greater durability than existing flash storage devices.

Tailor-made combination improves efficiency

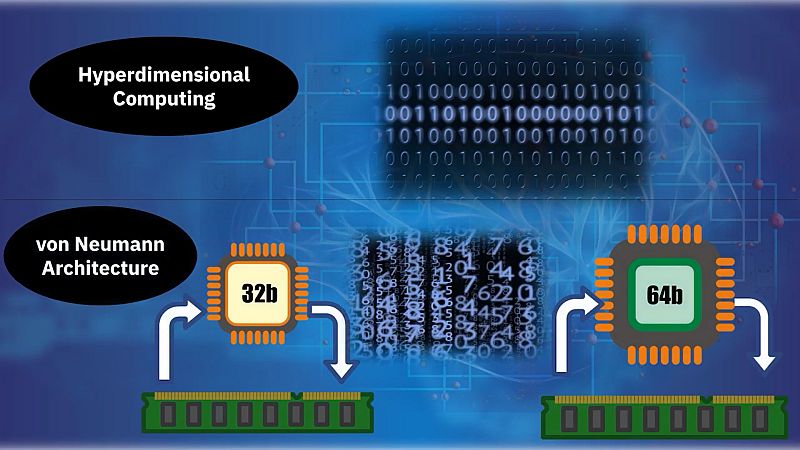

This tailor-made combination of the two concepts also overcomes the von Neumann bottleneck. The bottleneck takes its name from the von Neumann architecture, a design architecture for computers that is also known as the von Neumann model or the Princeton architecture.

The von Neumann bottleneck (VNB) is the limitation a computing system faces because of the insufficient rate of data transfer between memory and the CPU. The CPU is left idle while it waits for data, so it is not used to its full capacity. Consequently, at the user level, applications run slowly.

The new combination improves energy efficiency and counters the lag, noise and failures of conventional computer architecture.

Computing with hypervectors

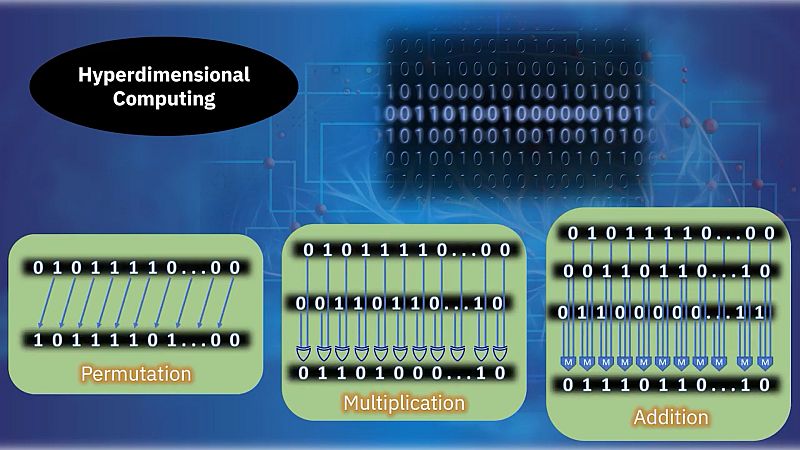

To carry out all core computations in-memory, HDC systems are built on the rich algebra of hypervectors.

Hypervectors are high-dimensional vectors (for example, D = 10,000) whose components are independent and identically distributed.

When computing with hypervectors, all operations are implemented using algebra on vectors. New representations are computed directly from existing ones, thus arriving at a result much faster than gradient descent.
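The algebra on hypervectors can be sketched in a few lines of Python. This is a minimal illustration rather than the researchers' implementation: it assumes bipolar (+1/-1) components, element-wise multiplication for binding and a majority vote for bundling, which are common choices in the HDC literature. All function names are hypothetical.

```python
import random

D = 10_000  # dimensionality, as in the text

def random_hv(rng):
    """Random hypervector with i.i.d. components drawn from {-1, +1}."""
    return [rng.choice((-1, 1)) for _ in range(D)]

def bind(a, b):
    """Binding (assumed: element-wise multiplication)."""
    return [x * y for x, y in zip(a, b)]

def bundle(*hvs):
    """Bundling (assumed: element-wise majority vote; use an odd number of inputs)."""
    return [1 if sum(column) > 0 else -1 for column in zip(*hvs)]

def similarity(a, b):
    """Normalized dot product in [-1, 1]."""
    return sum(x * y for x, y in zip(a, b)) / D

rng = random.Random(0)
a, b, c = random_hv(rng), random_hv(rng), random_hv(rng)

print(similarity(a, b))                # close to 0: random hypervectors are nearly orthogonal
print(similarity(bundle(a, b, c), a))  # around 0.5: a bundle stays similar to its members
print(similarity(bind(a, b), a))       # close to 0: a bound pair resembles neither input
print(bind(bind(a, b), b) == a)        # True: binding with b a second time recovers a
```

Note that every new representation here is produced by a single pass of element-wise arithmetic, with no iterative optimization involved.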

Gradient descent is an optimization algorithm that iteratively adjusts the values of a model's variables, based on historical data, until they are close to ideal. It helps reduce prediction error, but it needs many update steps to get there.
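For comparison, the sketch below shows the step-by-step nature of gradient descent by fitting a single slope parameter. The data points, learning rate and number of steps are assumptions chosen for this example.

```python
# Hypothetical training data that follows y = 2x exactly.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]

def mse_gradient(w):
    """Derivative of the mean squared error of the model y = w * x with respect to w."""
    n = len(xs)
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n

def gradient_descent(w=0.0, learning_rate=0.01, steps=200):
    """Repeatedly step against the gradient to reduce the prediction error."""
    for _ in range(steps):
        w -= learning_rate * mse_gradient(w)
    return w

w = gradient_descent()
print(round(w, 3))  # 2.0, the slope that generated the data
```

Even this one-parameter problem takes many update steps to settle, which is the cost that computing new representations directly from existing ones avoids.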

With the help of these hypervectors, HDC is able to create robust computing systems that can complete sophisticated cognitive tasks. Some of these tasks include:

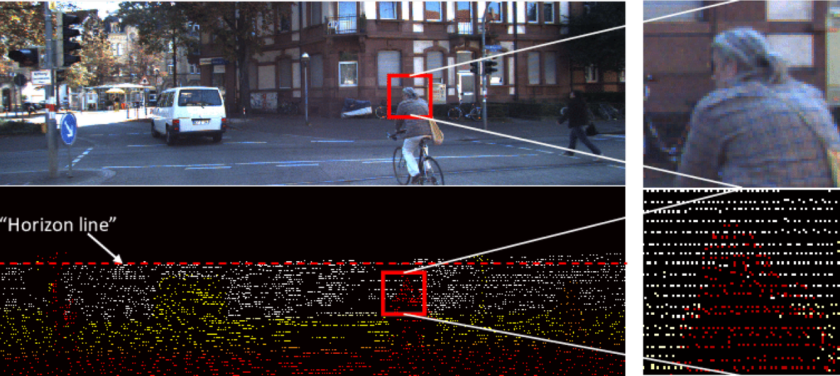

- Object detection: it is a challenging task as it combines image classification and object localization. It draws a bounding box around each object of interest within an image and assigns it a class label.

- Language recognition: the task of enabling computers to recognise which language a given piece of text is written in.

- Video (and voice) classification: it involves combining multiple channels of images, then pooling the image layers, layer by layer. This reduces the size of the image or frame field before the results are finally passed to fully-connected layers.

- Time series analysis: it involves collecting data from successive observations for predictive analysis. This helps in recognizing trends and cycles, and in forecasting future events.

- Text categorization: it is the task of allocating predefined tags or categories to free-text documents.

- Analytical reasoning: it involves analysing information through logic, with an emphasis on finding patterns and making inferences.
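To give a flavour of how one of these tasks can be tackled with hypervectors, here is a toy language recogniser in Python. It is a sketch under stated assumptions, not the researchers' system: letters get random bipolar hypervectors, a cyclic shift marks the position of a letter within a trigram, and each language is summarised by summing its trigram hypervectors. The training sentences and all names are hypothetical.

```python
import math
import random

D = 10_000
rng = random.Random(42)
item_memory = {}  # one fixed random hypervector per character

def char_hv(ch):
    """Look up (or create) the random bipolar hypervector for a character."""
    if ch not in item_memory:
        item_memory[ch] = [rng.choice((-1, 1)) for _ in range(D)]
    return item_memory[ch]

def rotate(hv, k):
    """Permutation (assumed: cyclic shift by k) marking a position in the n-gram."""
    return hv[-k:] + hv[:-k]

def encode(text, n=3):
    """Sum the hypervectors of all character n-grams in the text."""
    profile = [0] * D
    for i in range(len(text) - n + 1):
        gram = [1] * D
        for pos, ch in enumerate(text[i:i + n]):
            shifted = rotate(char_hv(ch), n - 1 - pos)
            gram = [g * s for g, s in zip(gram, shifted)]  # bind the letters
        profile = [p + g for p, g in zip(profile, gram)]   # bundle the n-grams
    return profile

def cosine(a, b):
    """Cosine similarity between two integer-valued profiles."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / math.sqrt(sum(x * x for x in a) * sum(y * y for y in b))

# Tiny hypothetical training samples; a real system would use far more text.
training = {
    "english": "the quick brown fox jumps over the lazy dog and the cat",
    "german": "der schnelle braune fuchs springt ueber den faulen hund",
}
profiles = {lang: encode(text) for lang, text in training.items()}

def recognise(text):
    """Return the language whose profile is most similar to the encoded text."""
    query = encode(text)
    return max(profiles, key=lambda lang: cosine(query, profiles[lang]))

print(recognise("the dog and the fox"))     # english
print(recognise("der hund und der fuchs"))  # german
```

Training here is a single pass of additions over the sample text, and adding a new language only requires encoding one more profile.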

The researchers added that, owing to the intrinsic robustness of HDC, their new system can carry out the core computations in-memory using logical and dot product operations on memristive devices.
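This robustness can be illustrated with a toy associative memory that is searched by dot product. The sketch below is a software model under loud assumptions, not a model of the PCM hardware: it stores three random bipolar prototype hypervectors under hypothetical labels, flips an arbitrarily chosen 30% of a query's components to mimic noise, and checks that the correct prototype is still retrieved.

```python
import random

D = 10_000
rng = random.Random(1)

def random_hv():
    """Random hypervector with i.i.d. components drawn from {-1, +1}."""
    return [rng.choice((-1, 1)) for _ in range(D)]

def dot(a, b):
    """Dot product, the similarity measure used for the search."""
    return sum(x * y for x, y in zip(a, b))

def add_noise(hv, flip_fraction):
    """Flip the sign of a randomly chosen fraction of the components."""
    noisy = list(hv)
    for i in rng.sample(range(D), int(flip_fraction * D)):
        noisy[i] = -noisy[i]
    return noisy

# Associative memory: label -> prototype hypervector (labels are hypothetical).
memory = {label: random_hv() for label in ("class_a", "class_b", "class_c")}

def search(query):
    """Return the label whose prototype has the largest dot product with the query."""
    return max(memory, key=lambda label: dot(query, memory[label]))

query = add_noise(memory["class_b"], flip_fraction=0.3)
print(dot(query, memory["class_b"]))  # 4000: 7,000 matching components minus 3,000 flipped
print(search(query))                  # class_b
```

Because the information is spread evenly across all 10,000 components, corrupting a large fraction of them still leaves the correct prototype far ahead of the unrelated ones, whose dot products with the query stay close to zero.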

Largest PCM-based in-memory HDC platform

Until now, most in-memory HDC architectures were applicable only to a limited set of tasks, such as single language recognition. The new HDC system, however, utilizes nearly 700,000 PCM devices, which makes it one of the largest in-memory HDC architectures fabricated so far.

The researchers envision that their PCM-based in-memory HDC platform will usher in an era of technology embedded with advanced memory capabilities.

It might play an important role in AI, where it could deliver systems that learn fast and, like humans, efficiently retain information throughout their ‘lifetime’. Its performance will be evaluated further once the system is tested in real-world settings.

Via: Kumudu Geethan Karunaratne, IBM Research Blog