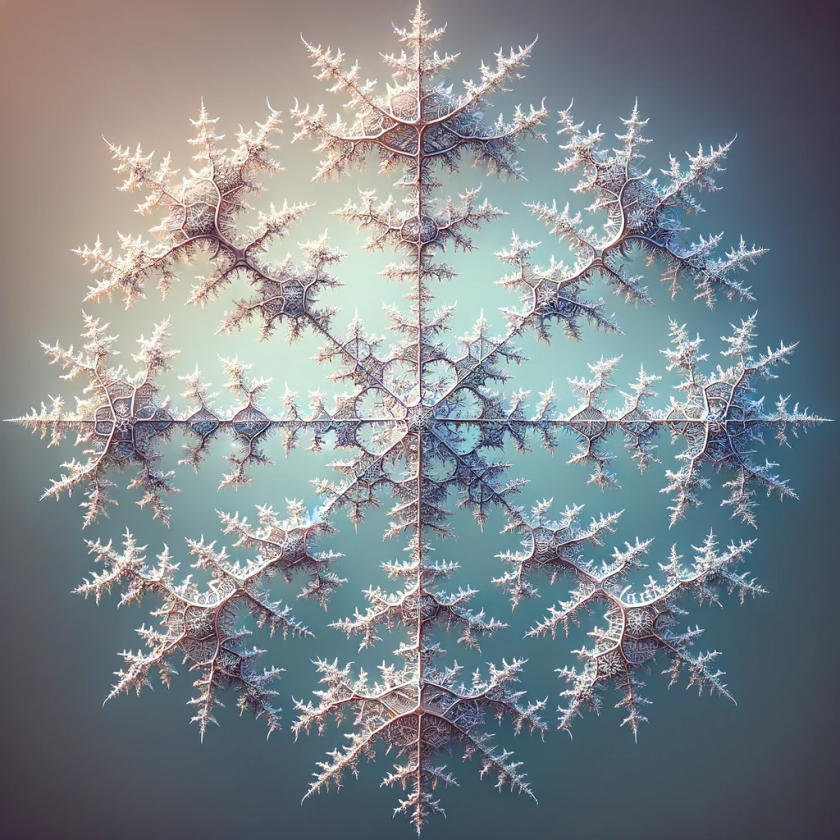

James Gleick, one of my favorite science writers, wrote this famous quote in his book Chaos: Making a New Science:

“IN THE MIND’S EYE, a fractal is a way of seeing infinity”.’

Doesn’t it also reflect how LLMs work?

How? Just like fractals, these models take patterns, layer them and build something infinitely complex from simple rules. It’s like staring into a never-ending web of words, where meaning keeps unfolding the deeper we go.

Of course, fractals and AI training are not related at all. One is a beautiful pattern that we see in nature, and the other is an advanced tech that helps run chatbots, search engines and generative writing tools. I don’t know why, but I love connecting different subjects coz, I feel, more or less, each one is moving towards a deeper understanding of patterns and complexity. Each has a common denominator, which is, the search for structure, meaning, and underlying rules that shape our world, be it math, science, language, literature or art. At least, that’s how I see it!

The Fractal Nature of AI Training

Imagine you want to teach an AI model how to generate human-like text. You don’t just feed it a bunch of words and expect it to “get it” immediately. The process is iterative. I like the word iteration, it sounds like a fancy way of saying ‘try again, but smarter this time. 🙂

Anyways, the model starts with random predictions, gradually refines them, and, over time, builds a deeper understanding of patterns in language. Just like how a fractal unfolds from a simple equation, an LLM grows from a set of fundamental learning principles.

Here’s how this works, step by step:

Step 1: A Simple Starting Pattern

A fractal begins with a seed shape, which could be a line, a triangle or maybe a curve. The same is true for training an AI model. At the very beginning, an LLM is just a big network of connections that haven’t been trained yet. It has a lot of possibilities, but it doesn’t know anything yet.

For example, it’s like teaching a child to read. You start with the alphabet, then move on to simple words. An AI model starts in a similar way, it sees raw text data but doesn’t understand meaning yet.

Step 2: Iterative Growth Through Training

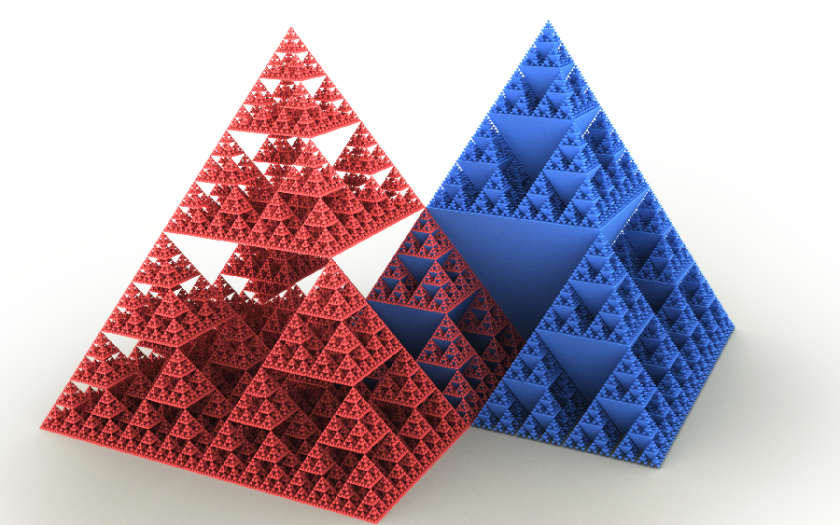

Fractals develop by applying the same rule, repeatedly. The famous Sierpiński triangle (shown above) for instance, is created by taking a simple triangle and removing smaller triangles from its center again and again. Each new iteration adds more detail.

LLMs grow in complexity through a similar process. During training, the model repeatedly processes vast amounts of text, changing its internal setup to learn and identify patterns. It doesn’t just “memorize” sentences, like we used to do in our schools, rather it finds relationships between words, grammar rules and even deeper contextual meanings.

The process is very much similar to learning a new language. The first time we hear a sentence from a foreign language, (for me it’ll be French or Spanish) it might seem meaningless. However, as we expose ourselves to more sentences, the words and tone start to make some sense. Our brain begins to recognize patterns, and LLMs learn in much the same way, just at an immense scale.

Step 3: Refinement Through Feedback

In fractals, each zoom-in reveals more detail. In AI training, fine-tuning does the same. If a model starts generating text that is slightly off, researchers refine it by adjusting weights or using reinforcement learning with human feedback (RLHF).

Imagine teaching a student to write an essay. At first, their drafts might be rough. But with each round of feedback, which could be “Use stronger verbs”, “Avoid repetition”, “Clarify your argument”, “Shed some examples”, “Write in passive mode”, their writing improves.

AI models go through this same process. Trainers guide them by reinforcing useful outputs and discouraging mistakes, while at times, variations in prompts generate much better results, the entire process is like an artist refining the tiny details of a picture as they get closer.

Step 4: Scaling Up to See Emergent Complexity

If we zoom out on a fractal, we see a breathtakingly intricate design, one that wasn’t immediately obvious from its small beginnings. LLMs, when scaled up, show emergent abilities that weren’t explicitly programmed.

For instance, smaller AI models might struggle with abstract reasoning, but as they grow larger (with more parameters and more training data), they start developing surprising skills, which includes understanding humor, translating languages with little prior training, and even explaining complex topics. It’s almost like TARS from Interstellar, not real (yet), but you can imagine what it would feel like if an AI could crack jokes and break down quantum physics while effortlessly connecting the pod with the Endurance in a high-stakes docking maneuver.

Just as a fractal’s beauty becomes clearer at larger scales, an AI model’s intelligence becomes richer when it has been trained extensively. Kind of like how thousands of small steps somehow add up to finishing a 42-kilometer marathon. It feels impossible at first, but before you know it, you’re crossing the finish line, totally exhausted but amazed and completely overwhelmed!

What Happens When Growth Goes Wrong?

Fractals can sometimes become distorted if parameters aren’t set correctly. Similarly, LLMs can generate biased, incorrect or nonsensical outputs, also known as AI Hallucinations, if their training data is flawed. This is why AI development isn’t just about throwing more data at a model, as a matter of fact, it requires careful balance and monitoring along with ethical considerations.

Think about someone who learns only from a single source, let’s say, reading one type of book or hearing only one perspective/one side of a story. Their understanding of the world or the situation will obviously be skewed. AI faces the same risk: if it’s trained on biased or limited data, it will develop distorted patterns, just like a badly formed fractal.

AI Training: Like a Fractal, the More You Look, the More There Is

Understanding the fractal-like nature of AI training helps us appreciate why models behave the way they do. It also explains why AI isn’t static, it’s always evolving, just like a growing fractal.

It also means that the future of AI isn’t just about bigger models. It’s about training more effectively, improving quality, and making sure that as these models grow, they do so in a way that benefits everyone.

Iteration: The Secret Ingredient Behind Growth and Complexity

At its core, AI training, like fractals, is about simple rules, repeated over time, leading to something extraordinary. From a raw dataset to a model that can understand and generate human-like text.

So the next time you see a swirling fractal design, think about how AI models learn. Whether it’s a tree branching out, a coastline forming its jagged edges, or an LLM improving through feedback, the underlying principle is the same:

Growth happens through iteration. Complexity comes from simple beginnings. And the more we refine the process, the more powerful the results become.