If I asked you which scene in The Matrix has always stuck with you, I’m sure most of you would say the bullet-dodging or the red pill. But for me it’s when Morpheus tells Neo that what he thinks is real is actually just electrical signals interpreted by his brain.

“What is real?” he asks. “How do you define real?”

That question has the power to raise existential questions about perception and consciousness. I have been thinking about it too, but from a different angle: what if we stopped trying to make computers think like brains, and instead let them think like… light?

I know that sounds absurd, because light doesn’t think. It just moves, refracts, and diffracts. It obeys the laws of physics as we know them, without question or hesitation. But that’s exactly the point.

What if the future of computing isn’t about making processors faster or cramming more transistors onto silicon wafers, but about surrendering control and handing computation to something that already moves at the ultimate speed limit of the universe?

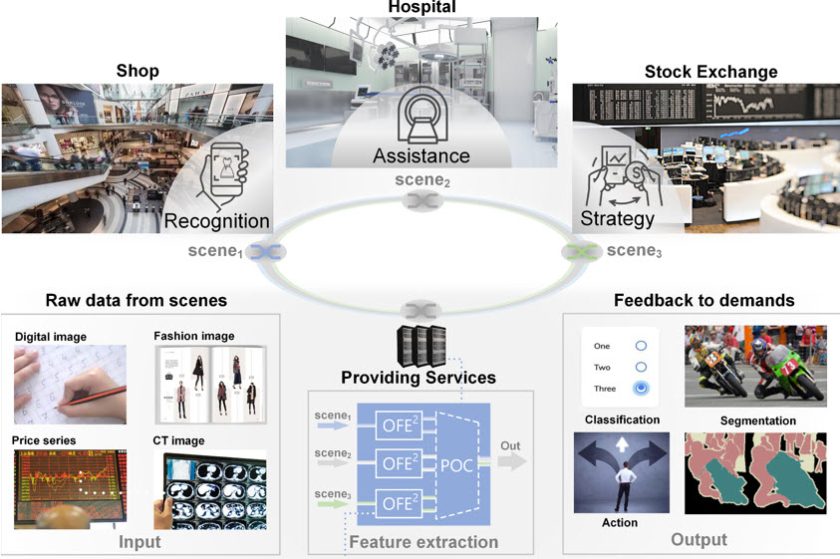

The seeds of this idea were sown last weekend, when I came across something called OFE² – an Optical Feature Extraction Engine, developed by researchers at Tsinghua University.

The name might suggest some cyberspace fantasy, but it’s a real chip, operating right now in a lab, processing data not with electricity but with light itself. Its integrated diffraction and data preparation modules make it much faster and more efficient for tasks involving AI.

Just reading about the tech makes it feel like we are entering an era where everything is about to change fundamentally.

This idea was further reinforced yesterday while I was watching Blade Runner 2049. Remember that scene where K interacts with a giant holographic AI? The whole interface is light, with beams and particles and volumetric displays. There is no visible hardware and there are no whirring fans. Just light doing… whatever light does in Denis Villeneuve’s imagination.

Now think about doing similar stuff but at nanoscale and in the real world!

When Computing Was About Electrons

Let’s first see how traditional computing works. Every processor operates using a clock, a steady stream of timing signals that synchronizes its actions. For example, a 3 GHz processor runs through 3,000,000,000 clock cycles per second, and each operation requires one or more of those cycles to execute.
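To put the 3 GHz figure in perspective, here is a minimal Python sketch of the clock arithmetic. The 4-cycle operation is a made-up example, not a figure for any particular processor.

```python
# Back-of-the-envelope clock arithmetic for the 3 GHz example above.
CLOCK_HZ = 3_000_000_000  # 3 GHz, from the text


def cycle_time_ps(clock_hz: int) -> float:
    """Duration of one clock cycle in picoseconds."""
    return 1e12 / clock_hz


def operation_time_ns(cycles: int, clock_hz: int) -> float:
    """Time for an operation that needs `cycles` clock cycles, in nanoseconds."""
    return cycles * 1e9 / clock_hz


print(f"one cycle: {cycle_time_ps(CLOCK_HZ):.1f} ps")
# Hypothetical operation that takes 4 cycles to complete.
print(f"a 4-cycle operation: {operation_time_ns(4, CLOCK_HZ):.2f} ns")
```

Even the shortest possible step on such a processor lasts about a third of a nanosecond, and nothing can finish in less than one tick of the clock.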

It’s like having the fastest assembly line in the world, but every single widget still has to pass through every single station in sequence.

Electrons have to physically move through transistors, which means those transistors have to flip states. That process generates heat, and more heat means you need more cooling. Cooling requires energy, and energy costs money, and generates even more heat. It’s this circular problem we’ve been managing for decades by making everything smaller and more efficient.

This trend was famously captured by Moore’s Law, which observed that the number of transistors on a chip would roughly double every two years, giving us exponential improvements in performance and efficiency without fundamentally changing the physics. But now, we’re running out of room to shrink. We’re bumping up against the physical limits of how small a transistor can be before quantum effects start playing havoc with our calculations.

In The Three-Body Problem by Liu Cixin, there’s a civilization that builds a computer out of 30 million soldiers standing in formation, holding flags. When they want to compute something, the soldiers move according to predetermined rules. It’s brilliant and ridiculous because it’s basically what our computers do. We’ve just replaced soldiers with electrons.

But light isn’t bothered by these issues at all. It just keeps going.

When Light Becomes the Processor

When I first started reading about OFE², I had to read the description four times before it clicked. This chip, and calling it a chip feels almost wrong, doesn’t compute in the traditional sense. It doesn’t execute instructions, and there’s no fetch-decode-execute cycle. Instead, you shine light into it, and the light itself, just by traveling through carefully designed structures, becomes the computation.

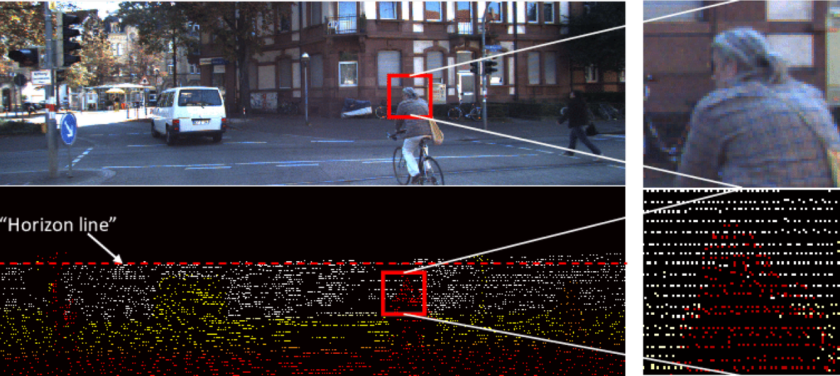

Let’s say you want to find the edges in a picture, basically the outlines of objects, like where a face ends and the background begins.

Normally, a computer has to look at every single pixel in the image, compare each one to nearby pixels, do a few calculations, and then decide where the edges are. It’s like going through the whole picture with a magnifying glass and checking tiny points one by one. That takes time and energy.
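The pixel-by-pixel approach can be sketched in a few lines of Python. This is a deliberately simple neighbour-difference detector with a made-up threshold and a toy 4x4 image, not the method any particular library uses.

```python
def detect_edges(image, threshold=50):
    """Mark a pixel as an edge when it differs sharply from its right or lower neighbour."""
    height, width = len(image), len(image[0])
    edges = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # At the image border, compare the pixel with itself (difference of 0).
            right = image[y][x + 1] if x + 1 < width else image[y][x]
            below = image[y + 1][x] if y + 1 < height else image[y][x]
            # Approximate the gradient by the sum of absolute differences.
            gradient = abs(image[y][x] - right) + abs(image[y][x] - below)
            edges[y][x] = 1 if gradient > threshold else 0
    return edges


# A tiny greyscale image: dark left half, bright right half.
image = [
    [10, 10, 200, 200],
    [10, 10, 200, 200],
    [10, 10, 200, 200],
    [10, 10, 200, 200],
]

for row in detect_edges(image):
    print(row)
```

Every pixel gets visited and compared in turn, so the work grows with the size of the image. That nested loop is the magnifying glass.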

But with OFE², you don’t do all that step-by-step work.

Instead, you turn the picture into patterns of light and shine them through a special chip. And when the light comes out the other side, the edges are already there, no extra math, no waiting. The chip and the light do the work as the light passes through.

It’s like printing a landscape on a sheet of paper and sliding it through a slot, only for it to come out the other side almost instantly with all the outlines already highlighted.

There’s another interesting thing in the research: the core latency is under 250 picoseconds.

Do you know how short a picosecond is? It’s to one second what one second is to about 31,710 years. Light travels about 0.3 millimeters in a picosecond, roughly the width of three human hairs. In 250 picoseconds it covers only about 7.5 centimeters, so this chip is doing meaningful computational work in the time it takes light to cross the palm of your hand.
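The arithmetic behind these comparisons is easy to check. A minimal sketch, assuming a 365-day year and light travelling in vacuum (it moves more slowly inside a chip):

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458   # exact, by definition of the metre
SECONDS_PER_YEAR = 365 * 24 * 3600     # assumption: a 365-day year
PICOSECONDS_PER_SECOND = 10**12


def light_distance_mm(duration_ps: float) -> float:
    """Distance light covers in vacuum during `duration_ps` picoseconds, in millimetres."""
    return SPEED_OF_LIGHT_M_PER_S * duration_ps / PICOSECONDS_PER_SECOND * 1000


# A second contains as many picoseconds as this many years contain seconds.
years_equivalent = PICOSECONDS_PER_SECOND / SECONDS_PER_YEAR

print(f"1 s : 1 ps is like {years_equivalent:,.0f} years : 1 s")
print(f"light in 1 ps:   {light_distance_mm(1):.2f} mm")
print(f"light in 250 ps: {light_distance_mm(250):.1f} mm")
```

Under these assumptions, the 250-picosecond window corresponds to light covering roughly 75 millimeters.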

In other words, the calculation here happens as fast as light can cross the chip, which is close to the speed of causality itself.

From Gigahertz to Picoseconds, A Different Scale of Time

Let me explain how fast and powerful this is, because the results are hard to believe. This new OFE² chip runs at 12.5 gigahertz, which puts it on par with some very advanced electronic hardware.

Normal computers need thousands of tiny steps to find features in an image, like edges or textures. This optical chip does it in one step: light goes in, passes through the chip, and comes out already processed. Done. Just like that.

The whole thing takes less than 82 nanoseconds, which is faster than many of the fastest special-purpose chips we have today. And the plus point is that it’s not some giant power-eating machine either. It’s super-efficient, doing around 2 trillion operations per second for every watt of power it uses. Each operation uses an incredibly tiny amount of energy, around 9.7 picojoules (that’s unimaginably small).
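One way to picture "one step" is as a single fixed linear transform applied to the whole input at once. The Python sketch below is only an analogy under that assumption. The difference matrix and the brightness values are invented for illustration and are not the chip's actual optical response.

```python
def difference_operator(n):
    """Build an (n-1) x n matrix whose rows compute differences between neighbours."""
    return [
        [-1 if col == row else 1 if col == row + 1 else 0 for col in range(n)]
        for row in range(n - 1)
    ]


def apply_operator(matrix, signal):
    """A single matrix-vector product: every output is a weighted sum of the inputs."""
    return [sum(w * s for w, s in zip(row, signal)) for row in matrix]


# Hypothetical brightness values along one row of an image.
signal = [10, 10, 10, 200, 200, 200]
features = apply_operator(difference_operator(len(signal)), signal)
print(features)  # the jump from dark to bright shows up as the one non-zero entry
```

Python still loops over every weighted sum here. The appeal of doing this with light is that all of those sums would form together as the light propagates, rather than one after another.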
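For a sense of scale, the sketch below counts how many clock cycles of the 3 GHz processor from earlier fit inside these latencies. `cycles_within` is a hypothetical helper written for this comparison. It uses integer picoseconds to avoid rounding surprises.

```python
def cycles_within(latency_ps: int, clock_hz: int) -> int:
    """Whole clock cycles that fit inside a latency given in picoseconds."""
    return latency_ps * clock_hz // 10**12


TOTAL_LATENCY_PS = 82_000      # 82 ns, from the text
CORE_LATENCY_PS = 250          # 250 ps, from the text
CPU_CLOCK_HZ = 3_000_000_000   # the 3 GHz example

print("cycles within total latency:", cycles_within(TOTAL_LATENCY_PS, CPU_CLOCK_HZ))
print("cycles within core latency: ", cycles_within(CORE_LATENCY_PS, CPU_CLOCK_HZ))
```

The full 82 nanoseconds leave room for a couple of hundred clock cycles, while the 250-picosecond core latency is shorter than a single cycle of that processor.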

I tried to find a good analogy for this, and the best I could come up with is this: if electronic computing is like mailing letters (fast, but still requires physical transit), optical computing is like telepathy. The answer appears almost the instant you ask the question, because the question and answer are the same event, just light being light.

Computation at the Speed of Causality

The research doesn’t stop at raw speed, and this is where my perspective on what’s possible really shifted. The team fed the chip images, and not just any images but the benchmark datasets machine learning researchers use all the time: MNIST (handwritten digits), Fashion-MNIST (clothing items), and even medical CT scans of liver tissue.

The optical engine extracted features from these images and when those features were fed into neural networks, the networks performed better than they did with conventional preprocessing.

In terms of performance metrics, MNIST accuracy improved by 2.5%, Fashion-MNIST by 3.6%, and liver segmentation by 1.1%.

Even though the machine-learning systems they tested were already very good and optimized, they still got noticeable improvements in accuracy just by changing the preprocessing step from digital (computer-based) to optical (light-based).

So instead of a computer cleaning and preparing images before the neural network sees them, this new optical device did it using light. That small change helped the neural networks “see” patterns better, which means the optical system picked up details that normal computer methods missed.

When the Tool Reveals More Than the Designer

This reminds me of a short story by Ted Chiang called “Exhalation”. The beings in the story realize their world works through air moving around, and by studying how the air flows, they figure out more about their own minds. That’s how I felt reading about these optical features.

The researchers built a tool to find edges in images, but it revealed much more than that: there’s more to visual information than we initially focus on.

It’s like a painter who typically uses only black and white to create sharp outlines around objects. One day, while experimenting with colors, he realizes that different colors can reveal shapes and details he couldn’t see before. It changes his whole understanding of what he has been painting.

The same happened in machine perception and optical computation. We assumed we were the designers looking down on a system. But in those brief moments of surprise, the perspective shifts. We become observers of physical laws expressing themselves through our tools. For instance, we could see:

- the autonomy of the substrate (light isn’t following the algorithm; the algorithm is borrowing light)

- the humility of engineering (we guide complexity more than we command it)

- the porous boundary between intention and discovery (design leads us somewhere, but never alone)

It’s a reminder that insight often comes not from enforcing our models, but from noticing where they fail.

At times, our logic doesn’t fit into the compartments we create. So can we say that the laws of physics themselves seem to have a mind of their own, and that we’re not the only intelligence out there?

When Markets Move Faster Than Thought

AI applications are now woven into critical real-time systems, ranging from surgical robotics to high-frequency financial trading, and they rely on the ability to rapidly extract key features from continuous streams of raw data.

Instead of flowing effortlessly, this capability is increasingly bottlenecked by traditional digital processors because of their physical limits. They struggle to deliver the latency reductions and throughput improvements demanded by emerging data-intensive services.

With this line of thought, the team performed a trading experiment.

They applied OFE² to high-frequency trading signals, specifically, gold futures. They fed in price data, and the optical engine detected pattern changes, generated trading signals, and after some tuning, achieved a profit metric of 6.02 on test data. Now, I’m not a finance person, but I know enough to understand that in high-frequency trading, latency is everything. Microseconds and nanoseconds matter.
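To give a feel for what "detecting pattern changes and generating trading signals" can mean, here is a toy Python sketch. The prices, the threshold, and the buy/sell rule are all invented for illustration. It is not the researchers' method and certainly not a trading strategy.

```python
def trading_signals(prices, threshold=0.5):
    """Label each step 'buy', 'sell' or 'hold' from the change since the previous price."""
    signals = []
    for previous, current in zip(prices, prices[1:]):
        change = current - previous
        if change > threshold:
            signals.append("buy")    # sharp upward move
        elif change < -threshold:
            signals.append("sell")   # sharp downward move
        else:
            signals.append("hold")   # change too small to act on
    return signals


# Made-up gold futures prices, one per tick.
prices = [1900.0, 1900.2, 1901.5, 1901.4, 1899.9]
print(trading_signals(prices))
```

The comparison of neighbouring values is the same kind of feature extraction as finding edges in an image, just applied to a price series instead of pixels.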

With an 82-nanosecond total latency, it is theoretically possible to make trading decisions faster than any electronic system. With this tool, you could react to market movements before the rest of the world even knows those movements have happened.

If this starts happening in the real world, imagine systems operating on timescales where human oversight becomes literally impossible, where decisions happen faster than neurons can fire.

At that point, markets are no longer driven by human intuition or strategy, but by machine logic unfolding at incomprehensible speed. And once machines begin shaping reality faster than humans can perceive it, the world enters a domain that operates beyond our cognitive horizon.

What Does This Look Like in The Real World?

Now, here’s food for thought: if this technology scales, and the researchers say it can, especially on silicon-nitride platforms, then we’d be looking at a fundamental shift in where and how computation happens.

Picture a smartphone with an optical preprocessing layer: the work begins the moment the camera captures an image. Before a single transistor fires, light itself has already extracted the key features, that is, faces, objects, entire scenes. All the heavy lifting that today burns battery and heats up silicon would happen passively, in the optical domain. That step would draw almost no power, so the battery could last far longer and the phone would stay cool to the touch. The AI capabilities that currently feel like premium, resource-intensive features would become near-instantaneous and effectively free.

There could be an interesting use case in medical imaging too. Right now, when we get a CT scan, there’s this gap between image acquisition and analysis. A radiologist has to review the images, look for anomalies, and make judgments. But what if the imaging device itself, using optical computing, could highlight potential tumors in real-time?

Let’s keep diagnosis out of the equation, because that should still require human expertise. The device would simply draw attention, instantly, to areas that deserve closer examination.

Autonomous vehicles could process visual data with microsecond latency. That’s the difference between hitting the brakes and not having time to react. Between seeing a pedestrian and not seeing them. With this level of responsiveness, cars transition from passive observers to active guardians of the road. Split-second intelligence becomes not just an advantage, but a necessity for true safety and trust.

Edge AI devices could operate on microwatts of power, because they wouldn’t be computing in the usual sense; they’d just let light do what light does. Instead of forcing electrons through silicon, they’d lean into the natural physics of photons. This means sensors and processors that run almost passively, like eyes that never tire. The result would be intelligence everywhere, from wearables to wildlife trackers, all operating without draining batteries or, maybe, the planet.

This Isn’t the End of Silicon, It’s a Shift in Power

At first glance, the overall trajectory of OFE² seems like it’s here to shake up GPU dominance. However, the device isn’t here to replace GPUs. In fact, this device speaks to a different future, something like:

- Compute where data lives

- Process in real-time

- Run on physics, not just silicon logic

The point is that for decades, we’ve been in an arms race with physics, trying to make electronics faster and denser, towards better efficiency. And physics keeps pushing back, with heat, with quantum tunneling, with the absolute limits of how fast electrons can move through matter.

The Universe Has Been Computing All Along

I think about Contact, one of my favorite films, based on the novel by Carl Sagan. The protagonist, Ellie Arroway, travels through a wormhole and experiences something that feels like hours, while to everyone watching only a few seconds pass and her recording shows nothing but static. When she tries to explain what happened, nobody believes her because it seems impossible. But she has proof: all that recorded static is actually data, if only someone knows how to read it.

That’s how I feel about optical computing. We’ve been looking at light wrong this whole time. We thought it was just for transmission, for fiber optics and laser communications. But light itself can be the computer. The calculation doesn’t happen with light, it happens as light.

And maybe that’s the lesson here. Maybe the future of computing isn’t about building better machines to think for us. Maybe it’s about recognizing that the universe has been computing all along, in the propagation of waves, the interference of fields, the interaction of particles. We’re just now learning to read the output.

The Next Chapter

So where do we go from here? I don’t know. In fact, no one knows for sure and that’s what makes this exciting.

But I can tell you what I’m watching for:

- The first smartphone with an optical preprocessing chip.

- The first autonomous vehicle with optical feature extraction.

- The first medical imaging device that highlights tumors in real-time.

- The first moment when someone realizes they can do something with light that was literally impossible with electronics.

The internet began in the late 1960s as a network for academic and military communication, and now we use it to watch cat videos and build billion-dollar companies. Transistors were curiosities before they became the foundation of civilization.

What will be the trajectory for optical computing? As I said, we don’t have the answers yet.

The reality is, somewhere in a lab, right now, a beam of light is passing through a carefully designed structure, and in passing, it’s computing something. It’s answering a question. It’s solving a problem. It’s being light, and in being light, it’s showing us what computation really means.

The future doesn’t wait for us to catch up. Light doesn’t wait for anything.

But maybe, if we stay hopeful, we can learn to move at its speed.

Refer to Advanced Photonics Nexus for supporting material.