In the Intelligent Motion Lab, where human ingenuity and technological wonders dance in harmony, resides Dr. Yifan Zhu. A visionary soul, Dr. Zhu has found his calling in robotics, under the wise guidance of Prof. Kris Hauser. Together, they delve deep into uncharted territories.

Like a master sculptor shaping formless clay, Dr. Zhu’s focus lies in unravelling the secrets of deformable objects. Through painstaking analysis and intricate algorithms, he breathes life into these enigmatic entities, capturing their essence within a digital tapestry.

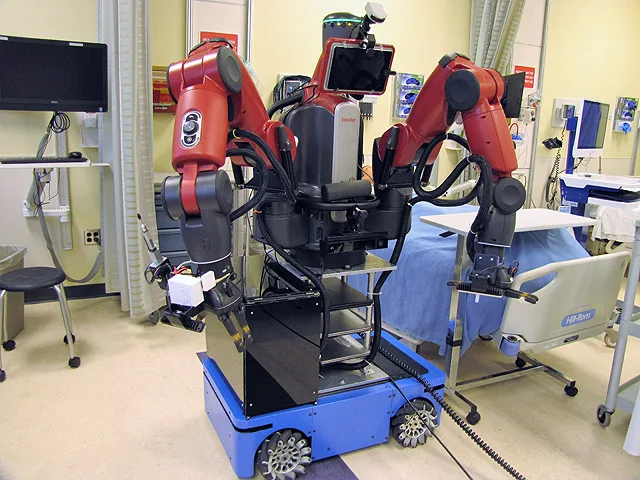

Dr. Zhu’s insatiable thirst for innovation knows no bounds. On the second front of their odyssey, he joins hands with fellow seekers of progress, forging ahead in the development of an extraordinary creation: TRINA, the nursing assistant robot.

An epitome of unity between man and machine, TRINA stands as a testament to the unyielding spirit of human innovation.

As Dr. Zhu treads upon these remarkable frontiers, he managed to squeeze in time for an interview with us. Please scroll down to learn more about his ideas and research 🙂

What initially sparked your interest in Robotics, and what motivated you to pursue it as a career?

When I was an undergraduate student at Vanderbilt University, I did research in Prof. Robert Webster’s lab, whose main research focus is medical robotics. I really enjoyed doing research there, which motivated me to go to graduate school.

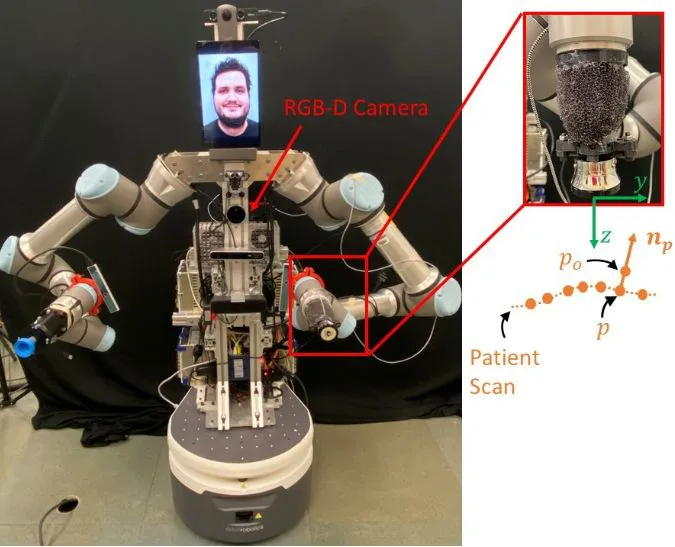

In your research – Automated Heart and Lung Auscultation in Robotic Physical Examinations, how does the Bayesian Optimization formulation help in selecting optimal auscultation locations?

We first leverage a machine learning model trained on our own datasets that estimates the sound quality of a short sound snippet.

When facing a new patient, a probabilistic model of the sound quality over the patient’s body is constructed using stethoscope recordings collected on that patient, while also leveraging prior information from the patient’s visual anatomical structure.

Then based on this probabilistic model, a Bayesian optimizer picks the next location that has the best expected quality, taking uncertainty into account as well.
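The selection loop described above can be illustrated with a minimal sketch. It assumes a Gaussian process as the probabilistic model and an upper-confidence-bound rule as the way of "taking uncertainty into account"; neither choice is confirmed by the interview. The names `rbf_kernel`, `gp_posterior`, `next_location`, and `visual_prior`, along with all numeric parameters, are hypothetical.

```python
import numpy as np

def rbf_kernel(a, b, length_scale=0.2):
    """Squared-exponential kernel between two sets of 2D surface points."""
    d2 = ((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-0.5 * d2 / length_scale**2)

def gp_posterior(x_obs, y_obs, x_query, prior_mean, noise=1e-2):
    """Posterior mean and standard deviation of sound quality at x_query.

    prior_mean is a callable standing in for the visual anatomical prior
    (an assumption made for this sketch).
    """
    k_oo = rbf_kernel(x_obs, x_obs) + noise * np.eye(len(x_obs))
    k_qo = rbf_kernel(x_query, x_obs)
    residual = y_obs - prior_mean(x_obs)
    mean = prior_mean(x_query) + k_qo @ np.linalg.solve(k_oo, residual)
    var = 1.0 - np.einsum("ij,ji->i", k_qo, np.linalg.solve(k_oo, k_qo.T))
    return mean, np.sqrt(np.clip(var, 0.0, None))

def next_location(x_obs, y_obs, candidates, prior_mean, kappa=1.0):
    """Pick the candidate with the highest mean + kappa * std (UCB rule)."""
    mean, std = gp_posterior(x_obs, y_obs, candidates, prior_mean)
    return candidates[np.argmax(mean + kappa * std)]

# Hypothetical usage: quality scores would come from the learned
# sound-quality estimator; here they are made-up numbers.
guess = np.array([0.5, 0.5])  # initial guess from the visual prior

def visual_prior(x):
    return np.exp(-((x - guess) ** 2).sum(axis=-1) / (2 * 0.1**2))

grid = np.linspace(0.0, 1.0, 11)
candidates = np.array([[u, v] for u in grid for v in grid])
x_obs = np.array([[0.1, 0.1]])  # one location already recorded
y_obs = np.array([0.0])         # its (made-up) quality score
best = next_location(x_obs, y_obs, candidates, visual_prior)
```

With only one low-quality recording far from the prior's peak, the rule favours the location the visual prior suggests; as more recordings arrive, the measured quality increasingly overrides the prior.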

How does the system utilize visual anatomical cues to determine the auscultation locations?

In auscultation, the goal is to listen to the sounds of specific anatomical structures in a patient’s body, e.g. various parts of the heart.

These locations are correlated with a patient’s body surface visual appearance, and doctors in real life take advantage of such information as well.

We first build an average human 3D model with the optimal sensing locations labelled by doctors.

Then, for a new patient, a 3D scan is obtained with an RGB-D camera, and deformable registration is performed to align the average human 3D model to the new patient’s 3D geometry. The deformed optimal sensing locations are then used as initial guesses of the optimal sensing locations on this patient.
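The idea of carrying labelled locations from a template onto a patient can be sketched in a much simplified form. The sketch below swaps deformable registration for a plain least-squares affine fit and assumes that point correspondences between the template and the scan are already known; a real deformable registration is considerably more involved. The function names `fit_affine` and `transfer_landmarks` and all data are hypothetical.

```python
import numpy as np

def fit_affine(src, dst):
    """Least-squares affine map (A, t) such that dst ≈ src @ A.T + t.

    src, dst: (n, 3) arrays of corresponding points (correspondences
    are assumed known here, which a real registration must estimate).
    """
    src_h = np.hstack([src, np.ones((len(src), 1))])       # (n, 4)
    params, *_ = np.linalg.lstsq(src_h, dst, rcond=None)   # (4, 3)
    return params[:3].T, params[3]

def transfer_landmarks(landmarks, A, t):
    """Map template landmarks into the patient's coordinate frame."""
    return landmarks @ A.T + t

# Hypothetical usage with synthetic data.
rng = np.random.default_rng(0)
template_pts = rng.normal(size=(20, 3))            # template surface samples
true_A = np.diag([1.1, 0.9, 1.05])                 # made-up body-shape change
true_t = np.array([0.02, -0.01, 0.3])
patient_pts = template_pts @ true_A.T + true_t     # simulated patient scan

A, t = fit_affine(template_pts, patient_pts)
template_landmarks = np.array([[0.0, 0.1, 0.2]])   # a doctor-labelled location
initial_guess = transfer_landmarks(template_landmarks, A, t)
```

The transferred landmark serves only as a starting point; in the system described above, the stethoscope recordings then refine it.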

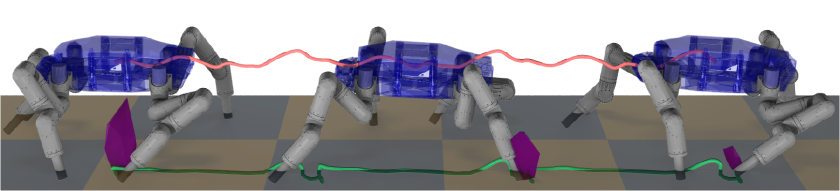

In your recent research – Robots making Autonomous Decisions on Extraterrestrial Missions, I’m curious to know how the proposed method outperforms non-adaptive methods and other state-of-the-art meta-learning methods in the excavation literature.

We show this by conducting experiments on a variety of terrains that are very different from what the robot has seen. The results show that our method allows the robot to quickly adapt to these novel terrains.

On the algorithm side, we propose a novel meta-learning technique that forces the machine learning model to experience large domain gaps during training, basically simulating what is going to happen when the robot is deployed on very different terrains.

This allows the model to be more robust to novel terrains.

What are the potential applications and implications of the proposed adaptive scooping strategy beyond extraterrestrial missions?

Our proposed algorithm has the potential to be applied to other robotics applications.

For robots to be deployed in the real world outside of factories, they will always face what is called a domain gap, where what the robot experiences is different from what it was trained on.

As a result, robots should be adaptable and constantly adjust to the ever-changing real world. Our algorithm is a general strategy for robot learning that makes robots adapt faster.

Super excited about TRINA placing 4th in the finals of the AVATAR XPRIZE competition. I wanted to know if there is any plan to introduce semi-autonomous functionality to the TRINA robot. And how will this functionality enhance the user experience and interaction with the robot?

Yes, and we actually already have a few semi-autonomous functionalities used at the competition, e.g. homing the robot arms and a functionality that acquires object surface textures from visual information.

We have additional functionalities that are implemented on our robot but were not used during the competition. For example, one such functionality is to constrain the robot hand movement to a plane. This would be helpful when the teleoperator wants to wipe a surface or write on a whiteboard.
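One simple way to keep a commanded hand position on a flat surface such as a whiteboard is to project it onto that surface's plane. The snippet below is a minimal sketch of that geometric step only, not TRINA's actual implementation; the function name `project_to_plane` and the example numbers are hypothetical.

```python
import numpy as np

def project_to_plane(point, plane_point, plane_normal):
    """Return the closest point to `point` on the given plane.

    plane_point: any point lying on the plane.
    plane_normal: a vector perpendicular to the plane (need not be unit length).
    """
    n = plane_normal / np.linalg.norm(plane_normal)
    # Remove the component of the offset that sticks out of the plane.
    return point - np.dot(point - plane_point, n) * n

# Hypothetical usage: the operator's commanded hand position drifts off
# a horizontal surface located at height z = 0.5.
commanded = np.array([1.0, 2.0, 3.0])
on_surface = project_to_plane(
    commanded,
    plane_point=np.array([0.0, 0.0, 0.5]),
    plane_normal=np.array([0.0, 0.0, 2.0]),
)
```

Applying this projection to each commanded position lets the operator move freely along the surface while the out-of-plane motion is suppressed.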

Various studies have shown that semi-autonomous functionalities are useful for teleoperation.

To take one step further, my colleague Patrick Naughton worked on a teleoperation system that not only implements semi-autonomous functionalities but also recommends the best function to use for novice users.

He is working on a user study that evaluates the effectiveness of this system, and hopefully it will be published soon.

What is your take on cybernetic enhancements in the human body?

I think it would be great if it works, especially for amputees and people with disabilities. Our technologies are just not there yet, but I hope we will see this kind of technology come to full fruition in a few decades.

What are your other interests besides CS… reading, painting, gardening, skiing maybe?

I enjoy playing basketball, reading novels, and cooking!

Someone comes up to you and says, “I wanna be just like you. I want to be a Roboticist”, what advice would you give?

Build a good foundation in math and physics. This is important as we rely on these skills daily. In addition, I would suggest that person get their hands dirty!

A lot of effort is going into making robotics education more accessible. You can build projects using cheap hardware or simply in simulators nowadays, and learn as you go.

Quick bits:

If you were a superhero what would your powers be?

I want automatically-played BGMs haha.

Your favourite scientific innovation of the 21st century?

I am not sure — there are too many to choose from!

What will your TED Talk be 10 years from now?

Hopefully I will be able to give a live demo of my robot doing really cool stuff, on par with or even better than what humans can do.

What books should I read in 2023?

Don’t really have a recommendation — classics will always be classics!

(Wow! Thank you, Dr. Zhu, it has been a real pleasure! Your work is truly an inspiration. We look forward to visiting you again and seeing more of your innovative research. Till then, we wish you all the very best for your future endeavors.)