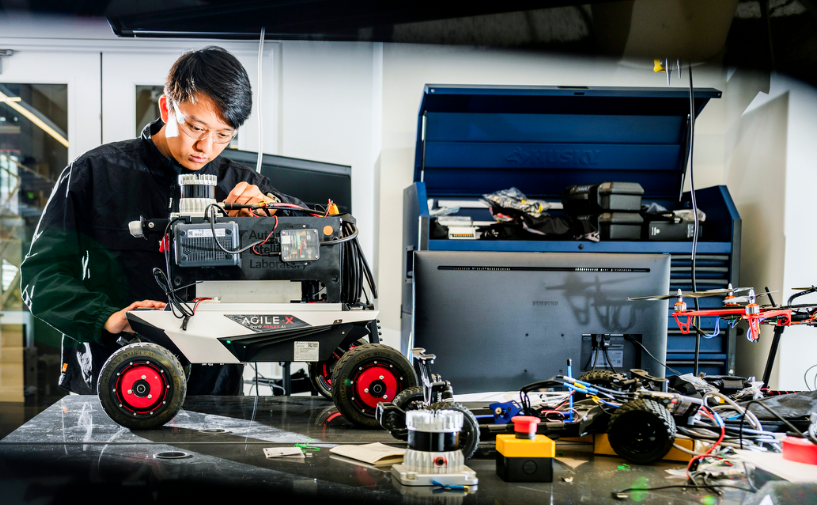

A paper from Northeastern University looks at how to improve the way robots and self-driving systems determine their location and build maps using LiDAR and IMU sensors. LiDAR sensors measure distance by bouncing laser pulses off objects, while IMU sensors track motion using accelerometers and gyroscopes.

The paper tackles a core robotics problem called SLAM, short for simultaneous localization and mapping. Basically, how can a robot know where it is while it’s still building the map?

The problem is, LiDAR sensors are great because they work at pretty much any time of day and give very accurate depth info. But the catch is, they produce a huge amount of data, and crunching all that in real time eats up a lot of memory and CPU. That’s alright in a lab, but on long trips or on smaller, less powerful hardware, it really starts to drag the system down.

Right now there are two main approaches. One is to use the whole point cloud, meaning all the LiDAR data, every time you update where the robot is. It’s really accurate but heavy on memory. The other is to pull out just some key features, like edges or flat spots, using hand-written rules, and only work with those. That saves space, but it can miss important information. Feature-based methods often fail in places where there’s a lot going on, like streets with moving cars or crowded areas with people walking around, because the rules can’t easily tell the difference between permanent structures (like walls) and temporary things (like a passing truck).
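To make the rule-based approach concrete, here is a minimal sketch of one well-known kind of heuristic: a local smoothness score computed along a single scan line, with high values labeled as edges and low values as flat spots. This is an illustration only, not the method used by any system named in this article. The function names, the window size, and the `edge_threshold` value are all assumptions.

```python
import math

def smoothness(scan_line, i, half_window=2):
    """Local smoothness of point i on one ordered scan line (lower = flatter).

    Assumes points are (x, y, z) tuples in the sensor frame and that
    no point sits exactly at the sensor origin.
    """
    p = scan_line[i]
    neighbours = scan_line[i - half_window:i] + scan_line[i + 1:i + 1 + half_window]
    # Sum of offsets from p to its neighbours: cancels out on a flat surface.
    diff = [sum(p[d] - q[d] for q in neighbours) for d in range(3)]
    return math.hypot(*diff) / (len(neighbours) * math.hypot(*p))

def label_features(scan_line, edge_threshold=0.1, half_window=2):
    """Label each interior point as 'edge' or 'planar' with a fixed rule."""
    labels = {}
    for i in range(half_window, len(scan_line) - half_window):
        c = smoothness(scan_line, i, half_window)
        labels[i] = "edge" if c > edge_threshold else "planar"
    return labels
```

A rule like this only sees local geometry, so the corner of a parked truck and the corner of a building get the same label. That is the weakness described above.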

The paper introduces a system called DFLIOM, which takes a different approach. Rather than relying on hand-picked features, it uses a compact neural network to pick out the most useful points for registration, that is, for matching one scan to another and working out how the robot moved.

The network looks for two kinds of points:

- Salient points: features that tend to persist within the environment over time, such as a building corner that remains identifiable in subsequent scans.

- Unique points: features that serve to distinguish one location from another, such as a mailbox that interrupts an otherwise uniform wall.

Training the network to identify these points yields a more refined set of truly useful points, instead of discarding roughly one-third of the scan based on rule-based filtering alone.
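One way to picture the selection step is as a ranking problem. The sketch below assumes the network has already produced two scores per point, one for how persistent the point is and one for how unique it is, and keeps the top-scoring fraction. The score inputs, the choice to multiply them, and the `keep_ratio` value are assumptions made for illustration; the article does not describe how the paper's network combines its outputs.

```python
import heapq

def select_points(points, persistence, uniqueness, keep_ratio=0.3):
    """Keep the highest-scoring fraction of a scan.

    points:      list of (x, y, z) tuples
    persistence: hypothetical per-point score, "likely to still be there later"
    uniqueness:  hypothetical per-point score, "helps tell this place apart"
    keep_ratio:  fraction of points to keep (assumed value, not from the paper)
    """
    # Multiplying means a point must do well on both scores to rank high.
    scores = [p * u for p, u in zip(persistence, uniqueness)]
    k = max(1, round(keep_ratio * len(points)))
    top = heapq.nlargest(k, range(len(points)), key=scores.__getitem__)
    # Return the kept points in their original scan order.
    return [points[i] for i in sorted(top)]
```

Ranking by a combined score, rather than by either score alone, reflects the idea that the most useful points are both persistent and distinctive.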

Smart Fallback Keeps Accuracy High in Tight Spaces

The cool part is that the system also has a fallback mode. If the robot’s in a tight hallway or small space, where even a few missing points could mess things up, it just uses the full scan instead. That flexibility helps it stay accurate without wasting memory when it doesn’t need to.

When a scan comes in, the system first uses IMU data to correct the motion distortion caused by the sensor moving mid-scan. Next, it decides whether the environment is confined or spacious. Based on that, it either keeps the entire scan or passes it to the neural network to select the best points. Finally, the scan is registered against a local submap built from previous scans to determine the robot’s current position.
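The control flow of those steps can be sketched as follows. This is a simplified illustration, not the paper's implementation: the `deskew` and `select` callables stand in for the IMU correction and the neural network, the median-range test in `is_confined` is an assumed stand-in for whatever check the system really uses, and the final registration against the submap is left out.

```python
import math

def is_confined(points, range_threshold=3.0):
    """Guess whether a scan was taken in a tight space.

    Assumption: a scan counts as confined when its median point range is
    below range_threshold metres. The real criterion is not given here.
    """
    if not points:
        return False
    ranges = sorted(math.dist(p, (0.0, 0.0, 0.0)) for p in points)
    return ranges[len(ranges) // 2] < range_threshold

def preprocess_scan(points, deskew, select, range_threshold=3.0):
    """Deskew a scan, then keep it whole or thin it out before registration."""
    corrected = deskew(points)  # IMU-based motion correction, supplied by caller
    if is_confined(corrected, range_threshold):
        # Fallback: in tight spaces every point matters, so keep the full scan.
        return corrected, "full"
    # Open space: keep only the points the selector considers most useful.
    return select(corrected), "selected"
```

The returned mode string is only there to make the branch visible; a real pipeline would hand the resulting points straight to the registration step.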

New System Beats DLIO and DLIOM, Cuts RAM Use by Over 50%

The system was evaluated using publicly available datasets as well as a self-recorded dataset captured on the Northeastern University campus, encompassing various challenging elements such as moving pedestrians and vehicles. Overall, the proposed system outperformed earlier methods, including DLIO and DLIOM, in terms of both accuracy and memory efficiency. Notably, it achieved a reduction in RAM consumption exceeding 50% relative to DLIOM, while maintaining precise localization.

Also, even though this system uses a learned model, it still runs fast: about 40 milliseconds per frame, which fits within the 50-millisecond budget needed for real-time use at 20 Hz.

The authors also tested what happens when either the salient or the unique points are left out, and found that both are needed for the best performance. Relying solely on persistent (salient) points caused trouble in repetitive environments such as long hallways. Conversely, using only unique points improved local matching accuracy but hurt long-term stability.

Takeaway

So overall, the takeaway is that rather than relying on traditional heuristic rules for selecting LiDAR features, the authors trained a compact model to identify the most informative points for scan matching and motion estimation.

It keeps memory use low, runs in real time, and works well even in busy, dynamic environments, paving the way for smarter, more efficient navigation that adapts across environments and runs well even on resource-limited platforms.

Source: Northeastern University